In some cases, evident:

In others, imperceptible:

False positives in simulations are here to stay. It is a reality faced by virtually all organizations that simulate Phishing, Smishing and Ransomware.

The problem is independent of the tool used. The solution? That’s a different story.

How false positives are generated

As explained in a previous post, simulation emails contain unique links, each of which identifies a single user within a campaign. These links are used to detect the interactions the user performs and, therefore, to measure their behavior.

If a piece of software requests these links, one or more times, it will generate false positives on behalf of the user to whom the simulation was addressed.
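To make the mechanism concrete, here is a minimal sketch of how per-user links could work. It is not how any specific product does it: the host `sim.example.com`, the function names `issue_links` and `record_hit`, and the token format are all assumptions chosen for illustration.

```python
import secrets

# Hypothetical tracking host, used only for illustration.
BASE_URL = "https://sim.example.com/t/"


def issue_links(campaign_id, recipients):
    """Create one unique link per recipient, plus a token -> (campaign, user) lookup."""
    links = {}
    token_index = {}
    for email in recipients:
        token = secrets.token_urlsafe(16)  # random, URL-safe identifier
        links[email] = BASE_URL + token
        token_index[token] = (campaign_id, email)
    return links, token_index


def record_hit(token_index, url):
    """Attribute a request to the user the link was issued for.

    The lookup only knows which link was requested. It cannot know whether
    the request came from the user or from a tool that scanned the email.
    """
    token = url.rsplit("/", 1)[-1]
    return token_index.get(token)


links, token_index = issue_links("campaign-1", ["ana@example.com", "bob@example.com"])
print(record_hit(token_index, links["ana@example.com"]))
```

The point of the sketch is the comment in `record_hit`: any request for the link is credited to the user, whoever or whatever made it.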

Keep in mind: this same problem is present in any tool that tracks people’s behavior. For example, if your organization uses any marketing tool, I assure you that it also has false positives in its results. Perhaps that announcement the marketing team considered so successful was actually seen by almost no one. Recommendation: do not say anything, as this news usually causes a lot of sadness, and sometimes it is better to be happy in a world of lies.

But well, since in your case you prefer to face reality, even if it is painful, let’s continue.

What tools cause false positives?

Short answer

Almost all of them.

Elaborated answer

The following list of tools is not exhaustive: the sources of false positives are very varied and constantly changing.

For this reason, it is neither possible nor practical to produce a complete list.

In addition, please note that everything below applies to both corporate and personal devices.

Tools that generate false positives:

- All those security solutions that analyze or intervene in some way in the organization’s e-mails. Here are some examples:

- Anti-spam and anti-phishing filters

- IDS/IPS

- DLP

- Email security gateways

- Antivirus

- Analysis and continuous monitoring

- Archiving and Discovery

- Threat Intelligence

- Etc.

- Any other software present on the device, browser or application (web or mobile) used to read corporate mail, such as:

- Desktop, web or mobile email applications

- Web browser extensions

- Plugins available in the inbox

- Link preview tools

- Etc.

How to avoid false positives?

In the corporate environment

Within those tools, devices, browsers and applications over which we have control within our organization, we can try to solve the problem through a Whitelist process.

It is important to note that the real objective of the Whitelist lies elsewhere: making sure the simulation is received by the user. In a simulation we want to measure how users behave, and for that we need the email or SMS to arrive in the inbox.

So the Whitelist does not have much to do with the false positive issue, but it can help.

However, if we analyze the possible sources of false positives, it is clear that it is very difficult, or in some cases directly impossible, to implement Whitelist in all the technologies involved.

In addition, there is an important point to keep in mind: even with a Whitelist in place, some tools still analyze the e-mails. In these cases there is no escape from the false positive.

In the personal environment

If users check corporate mail on their personal devices, there is very little we can do. At best, we would need each user’s permission to access the device, remove every technology that can cause false positives and implement Whitelists on those that must stay. Is what I am writing even feasible? I don’t think so, but it is the only thing I can think of for this environment.

How to detect false positives?

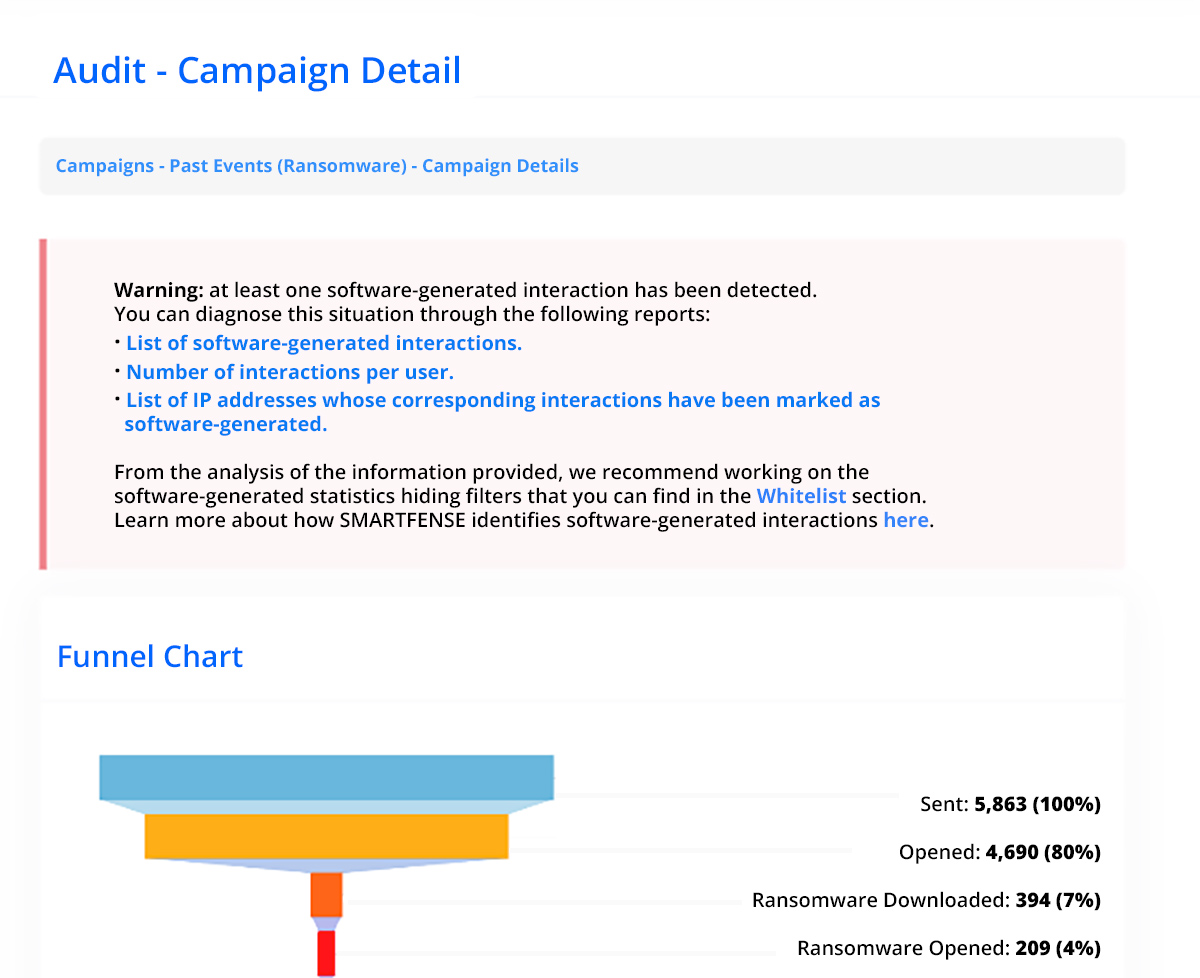

Option 1: let the simulation tool detect them for us

The best option for detecting false positives is to use a simulation tool that detects them for us and simply alerts us if our campaign is affected.

Better yet if this tool also provides a series of reports that help us understand the origin of the problem, such as:

- List of IP addresses from which false positives are coming

- Whois information of these IP addresses

- User-agent of false positives

- Trace of the HTTP packet that generated the false positive

- Etc.
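As an illustration of what such a report boils down to, here is a minimal sketch that groups already-flagged events by IP address and user-agent. The event fields (`ip`, `user_agent`) and the function name `summarize_sources` are assumptions made for this example, not the format of any particular tool, and the addresses are documentation-only sample values.

```python
from collections import Counter


def summarize_sources(flagged_events):
    """Count flagged events per IP address and per user-agent, most frequent first."""
    by_ip = Counter(event["ip"] for event in flagged_events)
    by_agent = Counter(event["user_agent"] for event in flagged_events)
    return by_ip.most_common(), by_agent.most_common()


# Sample data, invented for the example.
flagged = [
    {"ip": "203.0.113.10", "user_agent": "ExampleScanner/1.0"},
    {"ip": "203.0.113.10", "user_agent": "ExampleScanner/1.0"},
    {"ip": "198.51.100.7", "user_agent": "ExamplePreview/2.3"},
]

ip_report, agent_report = summarize_sources(flagged)
print(ip_report)
print(agent_report)
```

A handful of IP addresses or user-agents responsible for most of the flagged events usually points to a single tool scanning the emails, which is where Whois information becomes useful.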

Even better, times two: if we contract a managed service from a partner that operates SMARTFENSE, we don’t have to worry about anything.

Option 2: manually

If we don’t have a tool that detects false positives for us, things get a little uglier.

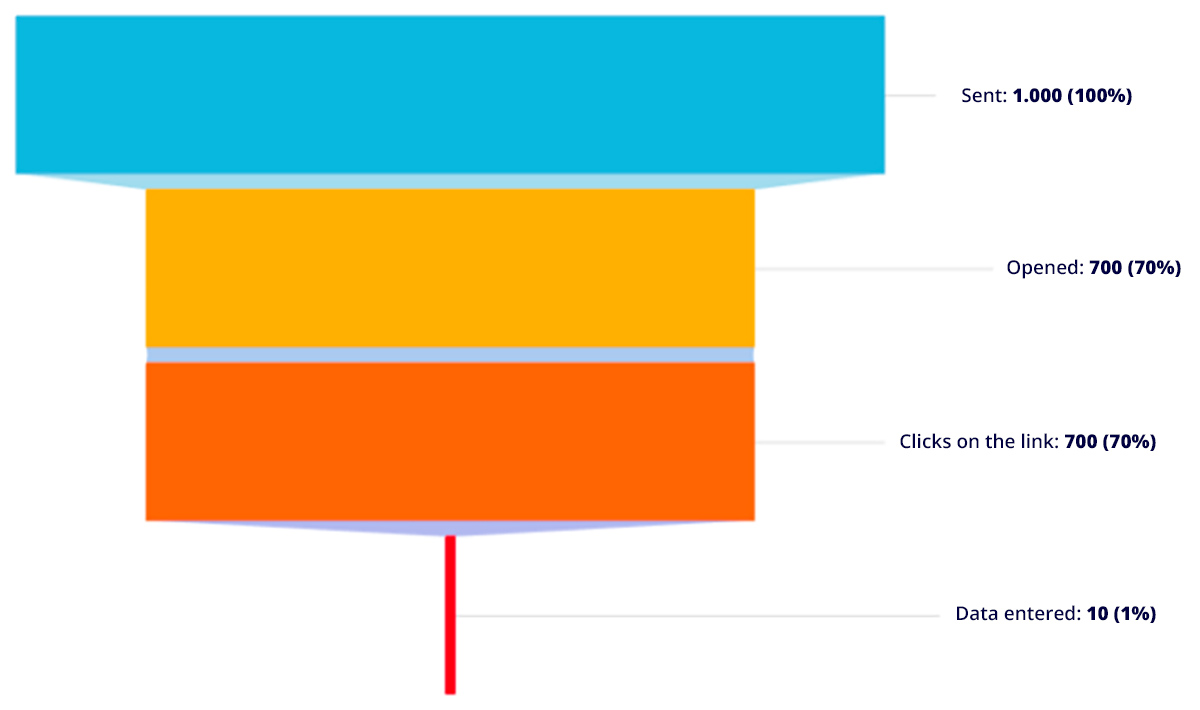

If the problem is obvious:

Sometimes the problem will be more visible, as the results of the campaign will draw our attention. For example:

- Emails sent: 1000

- Emails opened: 900

- Clicks on the link: 900

In cases like this, it is clear that something strange has happened. That campaign is headed for the trash, because how can we tell which interactions our users actually made?

In practice we can follow a manual strategy: for example, look up the interactions made by each user and observe how many seconds apart they occurred. In this way we could rule out:

- Those users who interacted at a date and time very close to the date and time the simulation email was sent: in these cases we can assume that it was actually a tool that analyzed the email moments before delivering it to the user.

- Those interactions that are very close in time: for example, an email opening and a click on the phishing link that took place within 1 second or less of each other.

If we manually perform this work we will surely clean up several false positives.
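The two criteria above can be sketched in a few lines. This is only an illustration of the manual strategy, not a reliable detector: the 60-second window after sending is an assumed value, the 1-second gap comes from the example above, and the function name and data layout are made up for the sketch.

```python
from datetime import datetime, timedelta

SEND_WINDOW = timedelta(seconds=60)  # assumed: "very close" to the send time
BURST_WINDOW = timedelta(seconds=1)  # interactions within 1 second of each other


def suspicious_interactions(sent_at, interactions):
    """Return the (timestamp, action) pairs of one user that look automated."""
    ordered = sorted(interactions, key=lambda item: item[0])
    flagged = []
    for i, (ts, action) in enumerate(ordered):
        # Criterion 1: the interaction happened right after the email was sent.
        near_send = abs(ts - sent_at) <= SEND_WINDOW
        # Criterion 2: the interaction is almost simultaneous with a neighbor.
        near_prev = i > 0 and ts - ordered[i - 1][0] <= BURST_WINDOW
        near_next = i + 1 < len(ordered) and ordered[i + 1][0] - ts <= BURST_WINDOW
        if near_send or near_prev or near_next:
            flagged.append((ts, action))
    return flagged


sent = datetime(2024, 1, 1, 9, 0, 0)
events = [
    (sent + timedelta(seconds=5), "open"),                     # right after sending
    (sent + timedelta(hours=2), "open"),                       # open and click
    (sent + timedelta(hours=2, milliseconds=500), "click"),    # half a second apart
    (sent + timedelta(hours=5), "open"),                       # plausible human open
]
for ts, action in suspicious_interactions(sent, events):
    print(ts.isoformat(), action)
```

Both thresholds are judgment calls, and tightening or loosening them trades missed false positives against discarded real interactions.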

But the campaign has to go down the drain anyway, as unfortunately we are not going to be able to clean them all up. Considering the number of sources of false positives that exist, with this cleaning we will only be covering a minor percentage.

Each source of false positives generates them at different times (not just upon receipt of the email) and performs different interactions with the emails (not always an open and a click, not always in that order either). That is why there is no logic that allows us to do a reliable manual cleaning.

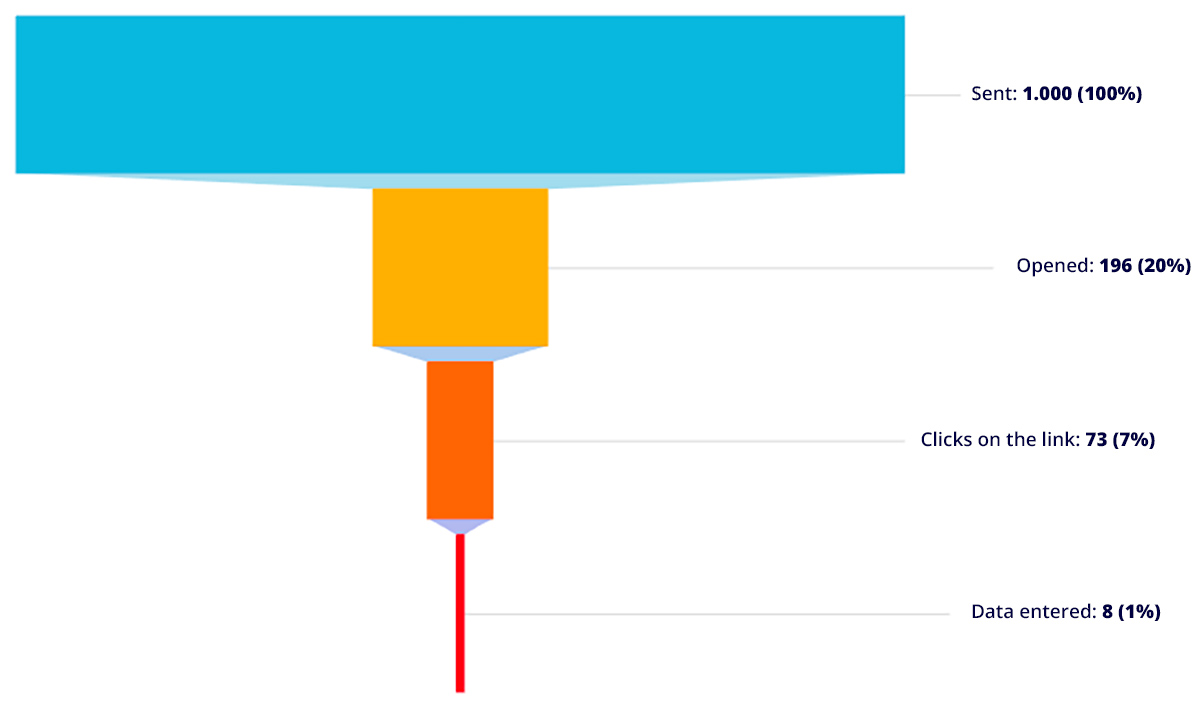

If the problem is not obvious:

In some cases the problem is invisible. These cases are the worst, since we will treat as real a set of results that is in fact partially false. And we will only find out when someone complains about being accused of an action they did not perform.

By then it is too late: any decision based on the results of our simulations will already have been made.

Final thoughts

In 2019 a customer raised the following issue with us: some users denied having fallen for Phishing simulations. While the audit logs indicated actions such as opening emails and clicking on the link, these users claimed that they had not performed these actions.

This was the first encounter we had with false positives. The users had not actually interacted and the statistics recorded on the platform came from a security solution.

From that moment on, we had two possible paths:

- Do the same as our competitors, i.e. tell the customer that the problem was theirs and have them solve it via whitelist.

- Help the customer obtain reliable results.

And as always, we went for option 2. From this was born the algorithm for false positive detection and our first success story related to this topic.

The SMARTFENSE detection algorithm covers the vast majority of cases and is constantly evolving. In turn, it is customizable, thus covering the reality of any organization.

Without a feature like this, it is not possible to obtain reliable results in the simulations. And this applies to any organization. For this reason, it is surprising that the major competitors in the Security Awareness market send their clients to Whitelist, knowing that this is not the solution and leaving organizations with more problems than solutions.

This is my 190th article on the SMARTFENSE blog, and I have always talked about the challenges of the social engineering world without explicitly recommending our platform.

But this time I will make an exception: If you are going to simulate phishing, use SMARTFENSE. You will save yourself a lot of headaches and get reliable results.
