Cyber threats are no longer limited to exploiting technical vulnerabilities. Increasingly, they take advantage of the weakest link: people.

In this context, Artificial Intelligence (AI) is emerging as a powerful tool for strengthening cyber awareness within organizations. But caution is needed: despite its potential, relying on AI blindly can turn a promise into a risk.

The Advantages of AI in Cybersecurity Training

Personalized and Adaptive Training

Thanks to machine learning, AI analyzes employees’ performance and behavior, offering tailored content. If a user frequently falls for phishing traps, they will receive additional training focused on that specific area.

Result: greater engagement, more effective learning, and less generic content.
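As a rough illustration of the adaptive idea described in this section, the sketch below assigns extra training on any topic where an employee's failure rate in simulated attacks is high. It is a deliberately simple threshold rule standing in for a learned model, and every name in it (`SimulationResult`, `recommend_modules`, the topic labels, the thresholds) is a hypothetical choice made for this example, not part of any specific product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SimulationResult:
    """Outcome of one simulated attack sent to one employee (hypothetical schema)."""
    user: str
    topic: str    # e.g. "phishing" or "password_hygiene"
    failed: bool  # True if the employee fell for the simulation


def recommend_modules(results, min_attempts=3, failure_threshold=0.5):
    """Return {user: [topics]} for topics where the user's failure rate is high.

    The thresholds are assumed values; a real program would tune them.
    """
    attempts = Counter()
    failures = Counter()
    for r in results:
        key = (r.user, r.topic)
        attempts[key] += 1
        if r.failed:
            failures[key] += 1

    recommendations = {}
    for (user, topic), n in attempts.items():
        # Skip topics with too few simulations to judge fairly.
        if n >= min_attempts and failures[(user, topic)] / n >= failure_threshold:
            recommendations.setdefault(user, []).append(topic)
    return recommendations


results = [
    SimulationResult("alice", "phishing", True),
    SimulationResult("alice", "phishing", True),
    SimulationResult("alice", "phishing", False),
    SimulationResult("alice", "password_hygiene", False),
    SimulationResult("bob", "phishing", False),
    SimulationResult("bob", "phishing", False),
    SimulationResult("bob", "phishing", True),
]
print(recommend_modules(results))  # {'alice': ['phishing']}
```

Here only Alice is assigned additional phishing training: she failed two of three simulations, while Bob failed one of three and stays below the assumed threshold.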

Automation and Immediate Response

AI-based systems can block suspicious emails in real time, simulate attacks, provide instant feedback, and adapt exercises based on user reactions.

This shortens response times and makes awareness more dynamic.

Advanced Analysis and Reporting

AI excels at processing large volumes of data: it can detect risk patterns, identify who needs more training, and measure the impact of awareness campaigns.

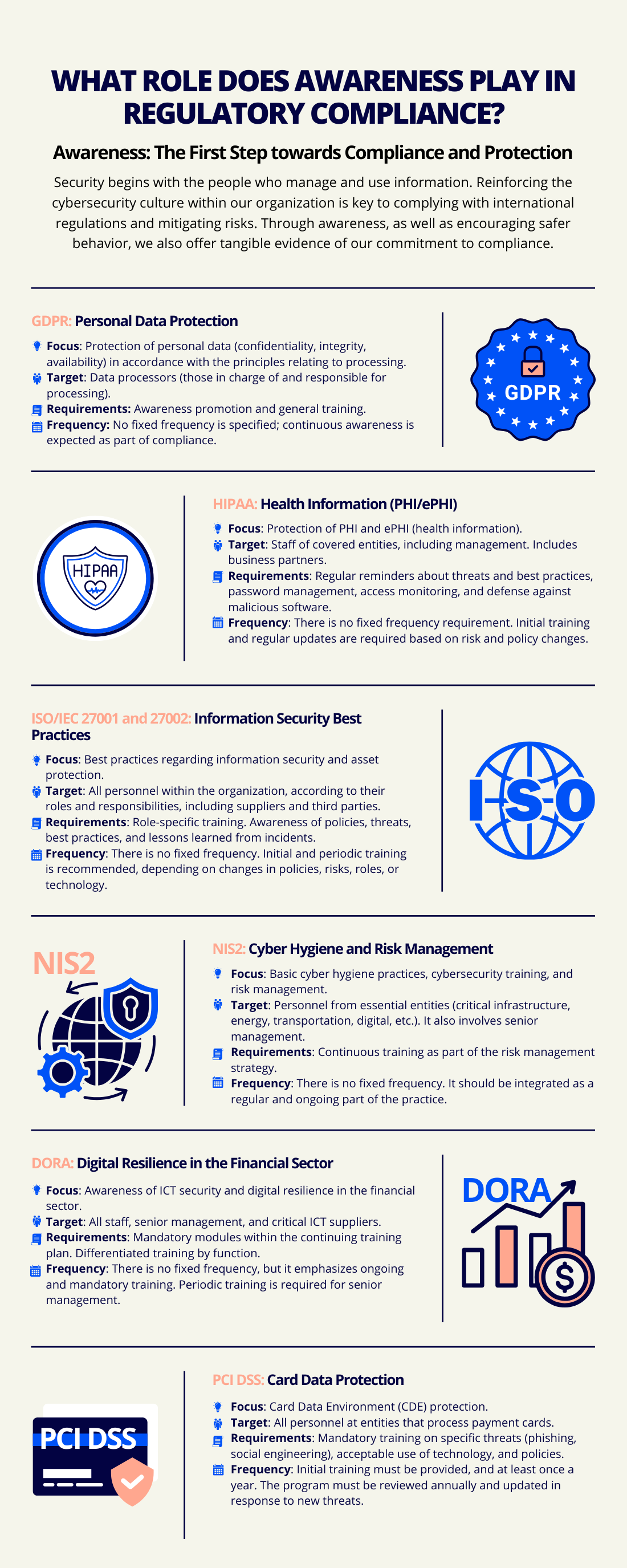

It is also a valuable resource for demonstrating regulatory compliance.
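To make the reporting idea more concrete, here is a minimal sketch of one possible metric: the change in phishing-simulation click rate per department before and after an awareness campaign. The event format, the department names, and the functions `click_rates` and `campaign_impact` are assumptions made for this example, and the numbers are invented sample data.

```python
from collections import defaultdict


def click_rates(events):
    """Compute click rates from (department, phase, clicked) tuples.

    Returns {department: {phase: click_rate}}.
    """
    sent = defaultdict(int)
    clicked = defaultdict(int)
    for department, phase, was_clicked in events:
        sent[(department, phase)] += 1
        clicked[(department, phase)] += int(was_clicked)

    rates = defaultdict(dict)
    for (department, phase), n in sent.items():
        rates[department][phase] = clicked[(department, phase)] / n
    return dict(rates)


def campaign_impact(rates):
    """Click-rate change (after minus before); negative means improvement."""
    return {
        dept: phases["after"] - phases["before"]
        for dept, phases in rates.items()
        if "before" in phases and "after" in phases
    }


# Invented sample data: four simulated emails per department and phase.
events = (
    [("finance", "before", True)] * 2 + [("finance", "before", False)] * 2
    + [("finance", "after", True)] * 1 + [("finance", "after", False)] * 3
    + [("engineering", "before", True)] * 1 + [("engineering", "before", False)] * 3
    + [("engineering", "after", True)] * 1 + [("engineering", "after", False)] * 3
)
impact = campaign_impact(click_rates(events))
print(impact)  # {'finance': -0.25, 'engineering': 0.0}
```

In this made-up sample, the finance click rate drops from 50% to 25%, while engineering shows no change and might be a candidate for a different kind of training.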

Where AI Falls Short: Risks and Limitations

Cultural and Linguistic Context

A message that is effective in Italy may be unsuitable in Spain or Latin America. No matter how sophisticated, AI struggles to capture cultural and social nuances.

The risk: generic, unattractive, or even misleading content.

The Irreplaceable Role of Humans

AI can generate texts, simulations, or quizzes, but it cannot decide how people should be educated. Human experts must validate, adapt, and give meaning to the content.

It is not AI that educates us; it is we who must educate AI.

Biases and Inaccuracies

Algorithms trained on incomplete or biased data can amplify discrimination or produce erroneous evaluations of employees. In training, this jeopardizes both worker trust and the effectiveness of the program itself.

Adversarial Attacks and False Positives

AI itself can be manipulated: attackers can craft inputs designed to evade or mislead a model. Moreover, if it raises too many false alerts, employees may develop “security fatigue” and start ignoring real threats.
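One way to watch for the false-alert problem is to track how many alerts turn out to be real. The sketch below computes two standard measures, precision and false positive rate, from a log of filter decisions. The log format and the function name `alert_quality` are assumptions made for this example, and the counts are invented.

```python
def alert_quality(decisions):
    """Summarize filter quality from (flagged, malicious) boolean pairs.

    precision: share of flagged emails that were really malicious.
    false_positive_rate: share of legitimate emails that were flagged.
    """
    tp = sum(1 for flagged, malicious in decisions if flagged and malicious)
    fp = sum(1 for flagged, malicious in decisions if flagged and not malicious)
    tn = sum(1 for flagged, malicious in decisions if not flagged and not malicious)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    false_positive_rate = fp / (fp + tn) if (fp + tn) else 0.0
    return {"precision": precision, "false_positive_rate": false_positive_rate}


# Invented sample: 100 emails, 4 of them malicious.
decisions = (
    [(True, True)] * 2      # malicious and flagged
    + [(False, True)] * 2   # malicious but missed
    + [(True, False)] * 6   # legitimate but flagged (false alerts)
    + [(False, False)] * 90 # legitimate and delivered
)
print(alert_quality(decisions))
# {'precision': 0.25, 'false_positive_rate': 0.0625}
```

A precision of 0.25 means three out of four alerts in this sample are false alarms, the kind of ratio that can wear down employees' attention even when the false positive rate itself looks low.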

The Key: Balance Between Technology and Human Intelligence

AI in cyber awareness is a powerful ally, but not a substitute.

The winning formula is balanced integration: leveraging AI’s speed, analytical capacity, and personalization while keeping human judgment, expertise, and context at the center.

Only then can we design truly effective training programs capable of protecting both people and organizations from increasingly sophisticated threats.

In short: AI alone is not enough to make companies safer. What is needed is AI guided by humans.
